Hybrid Programming: Combining OpenMP with MPI

NOTE: If you plan to use OMPD with an MPI program, some configuration is necessary; in particular, you must set the environment variable OMP_DEBUG. See Enabling OpenMP Debugging for details.

OpenMP shares memory among threads on a single node. MPI launches separate tasks that can run on multiple nodes and communicate with one another, but it does not share memory between nodes. You can combine the two technologies in a hybrid programming model to gain both shared memory within a node and the ability to distribute work across multiple nodes. In this model, each process is an MPI process, and the work within each process can be parallelized across OpenMP threads.

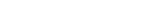

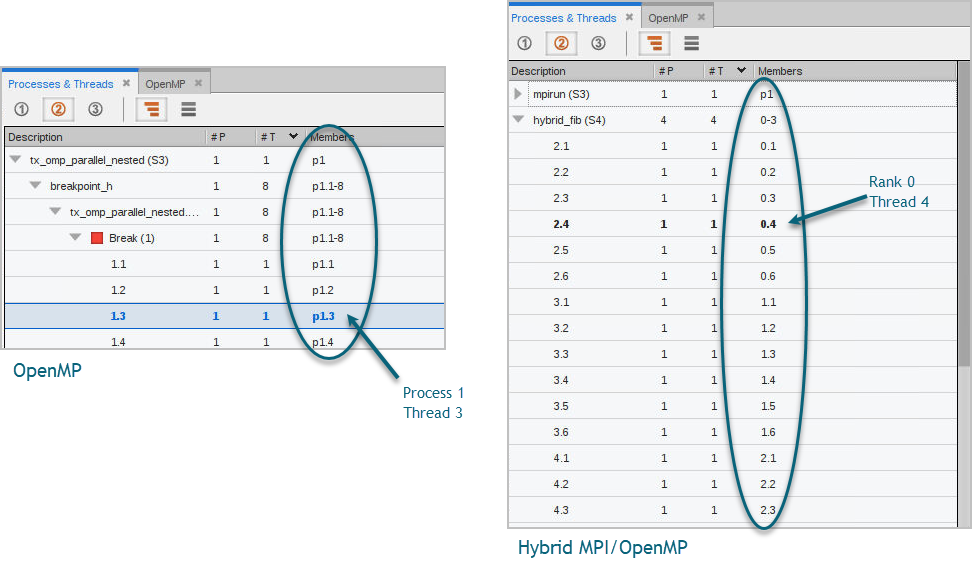

Consider the following example. Figure 114 compares the Threads tab for a pure OpenMP program (left) with the Threads tab for a hybrid program (right).

Figure 114, Hybrid programming example, Threads tab

The Threads tab for the pure OpenMP program displays a single process, identified by the “p1.x” process ID, along with its threads. The same tab for the hybrid program displays the MPI rank in place of the process ID, followed by the thread ID.

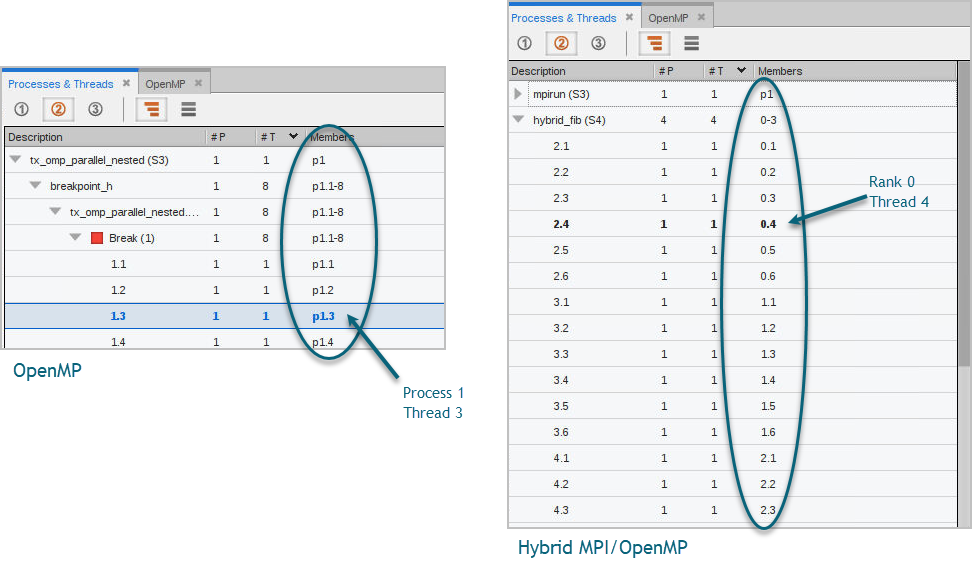

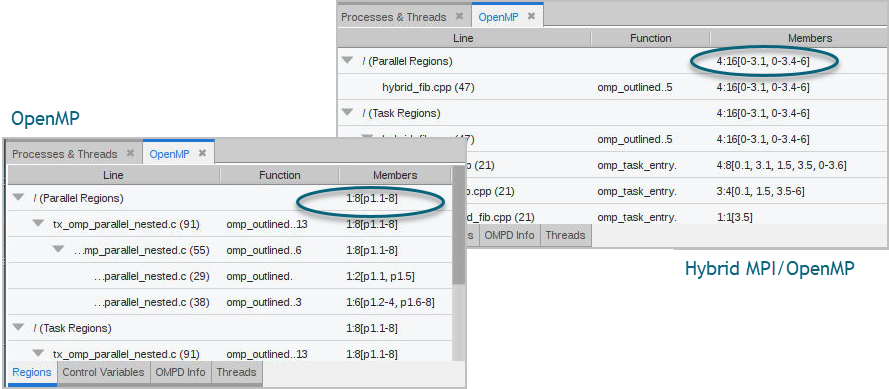

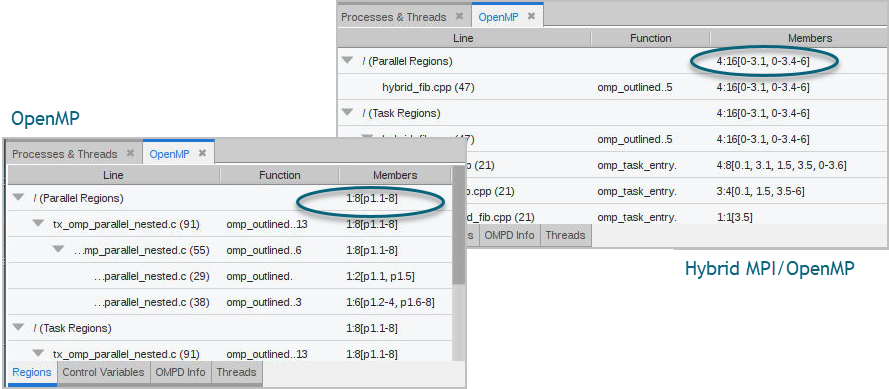

The Regions tab of the OpenMP view also reflects the MPI rank in the Members column, as shown in Figure 115.

Figure 115, Hybrid programming example, OpenMP view’s Regions tab

In this example, the hybrid MPI/OpenMP program (top right) has 4 MPI ranks with 16 threads.