Displaying CUDA Program Elements

On this page:

GPU Assembler Display

Due to limitations imposed by NVIDIA, assembler display is not supported. All GPU instructions are currently displayed as 32-bit hexadecimal words.

GPU Variable and Data Display

TotalView can display variables and data from a CUDA thread.

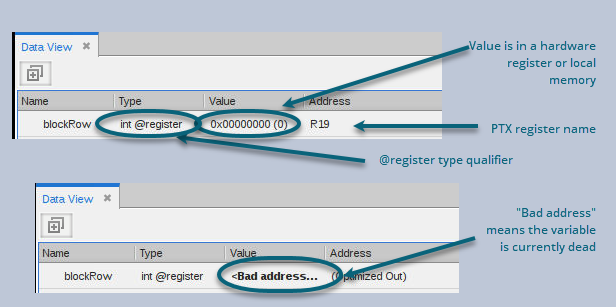

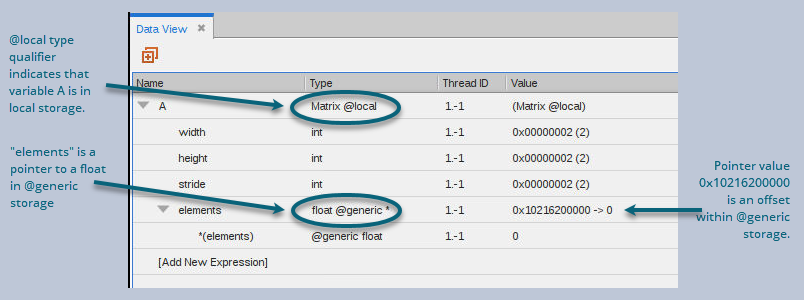

Add an expression from the Call Stack to the Data View to display parameter, register, local, and shared variables, as shown in Figure 134. The variables are contained within the lexical blocks in which they are defined. The type of the variable determines its storage kind (register, or local, shared, constant or global memory). The address is a PTX register name or an offset within the storage kind.

Figure 134. The Data View displaying a parameter

The identifier @local is a TotalView built-in type storage qualifier that tells the debugger the storage kind of "A" is local storage. The debugger uses the storage qualifier to determine how to locate A in device memory. The supported type storage qualifiers are shown in Table 18.

|

Storage Qualifier |

Meaning |

|

@code |

An offset within executable code storage |

|

@constant |

An offset within constant storage |

|

@generic |

An offset within generic storage |

|

@frame |

An offset within frame storage |

|

@global |

An offset within global storage |

|

@local |

An offset within local storage |

|

@parameter |

An offset within parameter storage |

|

@iparam |

Input parameter |

|

@oparam |

Output parameter |

|

@shared |

An offset within shared storage |

|

@surface |

An offset within surface storage |

|

@texsampler |

An offset within texture sampler storage |

|

@texture |

An offset within texture storage |

|

@rtvar |

Built-in runtime variables (see CUDA Built-In Runtime Variables) |

|

@register |

A PTX register name (see PTX Registers) |

|

@sregister |

A PTX special register name (see PTX Registers) |

|

@managed_global |

Statically allocated managed variable. See Managed Memory Variables. |

The type storage qualifier is a necessary part of the type for correct addressing in the debugger. When you edit a type or a type cast, make sure that you specify the correct type storage qualifier for the address offset.

Managed Memory Variables

The CUDA Unified Memory component defines a managed memory space that allows all GPUs and hosts to “see a single coherent memory image with a common address space,” as described in the NVIDIA documentation “Unified Memory Programming.”

Allocating a variable in managed memory avoids explicit memory transfers between host and GPUs, as any allocation created in the managed memory space is automatically migrated between the host and GPU.

A managed memory variable is marked with a "__managed__" memory space specifier.

How TotalView Displays Managed Variables

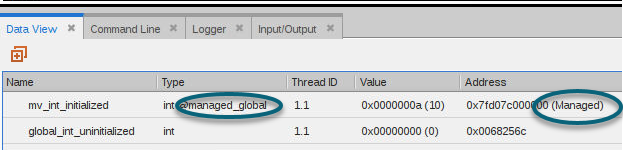

To make it easier to recognize and work with managed variables, TotalView annotates their address with the term “Managed”, and, for statically allocated variables, adds the @managed_global type qualifier.

Statically Allocated Managed Variables

For example, consider this statically allocated managed variable, declared with the __managed__ keyword:

__device__ __managed__ int mv_int_initialized=10;

TotalView decorates the type with @managed_global and adds “(Managed)” to its address. Here, note that the managed variable is identified in these ways, while the regular global is not:

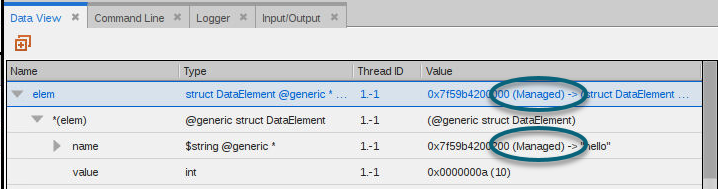

Dynamically Allocated Managed Variables

Managed memory can be dynamically allocated using the cudaMallocManaged() function, for example:

cudaMallocManaged((void**)&(elm->name), sizeof(char) * (strlen("hello") + 1) );

Here, the Data View shows that the variable elem points into managed memory. That is, elem is a pointer and its value points into managed memory; note that the pointer’s value is annotated with "(Managed)".

Note that one of its members, name, also points into managed memory.

CUDA Built-In Runtime Variables

TotalView allows access to the CUDA built-in runtime variables, which are handled by TotalView like any other variables, except that you cannot change their values.

The supported CUDA built-in runtime variables are as follows:

-

struct dim3_16 threadIdx; -

struct dim3_16 blockIdx; -

struct dim3_16 blockDim; -

struct dim3_16 gridDim; -

int warpSize;

The types of the built-in variables are defined as follows:

-

struct dim3_16 { unsigned short x, y, z; }; -

struct dim2_16 { unsigned short x, y; };

You can dive on the name of a runtime variable in the Data View, which creates a new expression. Built-in variables can also be used in the TotalView expression system.

Type Casting

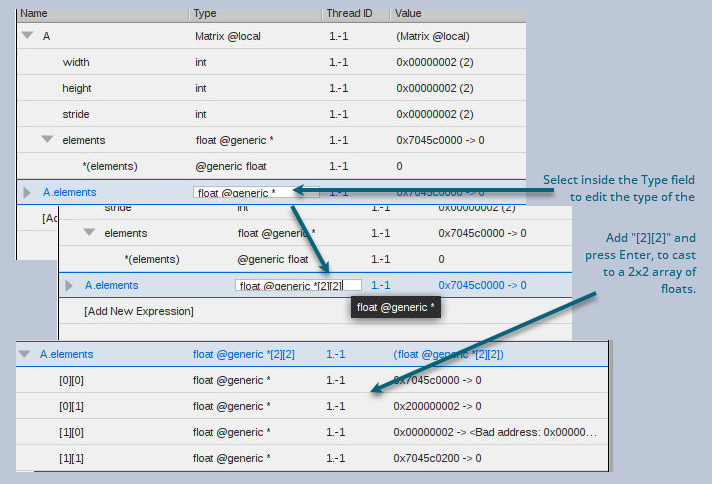

The Data View allows you to edit the types of variables. This is useful for viewing an address as a different type. For example, Figure 135 shows the result of casting a float in generic storage to a 2x2 array of floats in generic storage.

Figure 135. Casting to a 2x2 array of float in local storage

You can determine the storage kind of a variable by diving on the variable to create a new expression in the Data View in the graphical user interface (GUI), or by using the dwhat command in the command line interface (CLI).

Using the CLI to Cast

Here are some examples of using the CLI to determine variable types and to perform type casts.

When you are using the CLI and want to operate on a CUDA thread, you must first focus on the CUDA thread. The GPU focus thread in the CLI is the same as in the GUI:

d1.<> dfocus .-1

d1.-1

d1.-1>

The dwhat command prints the type and address offset or PTX register name of a variable. The dwhat command prints additional lines that have been omitted here for clarity:

d1.-1> dwhat A

In thread 1.-1:

Name: A; Type: @parameter const Matrix; Size: 24 bytes; Addr: 0x00000010

...

d1.-1> dwhat blockRow

In thread 1.-1:

Name: blockRow; Type: @register int; Size: 4 bytes; Addr: %r2

...

d1.-1> dwhat Csub

In thread 1.-1:

Name: Csub; Type: @local Matrix; Size: 24 bytes; Addr: 0x00000060

...

d1.-1>

You can use dprint in the CLI to cast and print an address offset as a particular type. Note that the CLI is a Tcl interpreter, so we wrap the expression argument to dprint in curly braces {} for Tcl to treat it as a literal string to pass into the debugger. For example, below we take the address of "A", which is at 0x10 in parameter storage. Then, we can cast 0x10 to a "pointer to a Matrix in parameter storage", as follows:

d1.-1> dprint {&A}

&A = 0x00000010 -> (Matrix const @parameter)

d1.-1> dprint {*(@parameter Matrix*)0x10}

*(@parameter Matrix*)0x10 = {

width = 0x00000002 (2)

height = 0x00000002 (2)

stride = 0x00000002 (2)

elements = 0x00110000 -> 0

}

d1.-1>

The above "@parameter" type qualifier is an important part of the cast, because without it the debugger cannot determine the storage kind of the address offset. Casting without the proper type storage qualifier usually results in "Bad address" being displayed, as follows:

d1.-1> dprint {*(Matrix*)0x10}

*(Matrix*)0x10 = <Bad address: 0x00000010> (struct Matrix)

d1.-1>

You can perform similar casts for global storage addresses. We know that "A.elements" is a pointer to a 2x2 array in global storage. The value of the pointer is 0x110000 in global storage. You can use C/C++ cast syntax:

d1.-1> dprint {A.elements}

A.elements = 0x00110000 -> 0

d1.-1> dprint {*(@global float(*)[2][2])0x00110000}

*(@global float(*)[2][2])0x00110000 = {

[0][0] = 0

[0][1] = 1

[1][0] = 10

[1][1] = 11

}

d1.-1>

Or you can use TotalView cast syntax, which is an extension to C/C++ cast syntax that allows you to simply read the type from right to left to understand what it is:

d1.-1> dprint {*(@global float[2][2]*)0x00110000}

*(@global float[2][2]*)0x00110000 = {

[0][0] = 0

[0][1] = 1

[1][0] = 10

[1][1] = 11

}

d1.-1>

If you know the address of a pointer and you want to print out the target of the pointer, you must specify a storage qualifier on both the pointer itself and the target type of the pointer. For example, if we take the address of "A.elements", we see that it is at address offset 0x20 in parameter storage, and we know that the pointer points into global storage. Consider this example:

d1.-1> dprint {*(@global float[2][2]*@parameter*)0x20}

*(@global float[2][2]*@parameter*)0x20 = 0x00110000 -> (@global float[2][2])

d1.-1> dprint {**(@global float[2][2]*@parameter*)0x20}

**(@global float[2][2]*@parameter*)0x20 = {

[0][0] = 0

[0][1] = 1

[1][0] = 10

[1][1] = 11

}

d1.-1>

Above, using the TotalView cast syntax and reading right to left, we cast 0x20 to a pointer in parameter storage to a pointer to a 2x2 array of floats in global storage. Dereferencing it once gives the value of the pointer to global storage. Dereferencing it twice gives the array in global storage. The following is the same as above, but this time in C/C++ cast syntax:

d1.-1> dprint {*(@global float(*@parameter*)[2][2])0x20}

*(@global float(*@parameter*)[2][2])0x20 = 0x00110000 -> (@global float[2][2])

d1.-1> dprint {**(@global float(*@parameter*)[2][2])0x20}

**(@global float(*@parameter*)[2][2])0x20 = {

[0][0] = 0

[0][1] = 1

[1][0] = 10

[1][1] = 11

}

d1.-1>

PTX Registers

In CUDA, PTX registers are more like symbolic virtual locations than hardware registers in the classic sense. At any given point during the execution of CUDA device code, a variable that has been assigned to a PTX register may live in one of three places:

-

A hardware (SAS) register

-

Local storage

-

Nowhere (its value is dead)

Variables that are assigned to PTX registers are qualified with the "@register" type storage qualifier, and their locations are PTX register names. The name of a PTX register can be anything, but the compiler usually assigns a name in one of the following formats: %rN, %rdN, or %fN, where N is a decimal number.

Using compiler-generated location information, TotalView maps a PTX register name to the SASS hardware register or local memory address where the PTX register is currently allocated. If the PTX register value is "live", then TotalView shows you the SASS hardware register name or local memory address. If the PTX register value is "dead", then TotalView displays Bad address and the PTX register name as show in Figure 136.

Figure 136. PTX register variables: one live, one dead